Deepfakes and AI-generated misinformation pose serious brand risks. By combining detection tools, crisis response planning, stakeholder education, legal measures, and proactive strategies, organizations can safeguard reputation, maintain trust, and navigate the evolving landscape of synthetic media effectively.

In today’s digital landscape, the rise of AI-driven deepfake technology poses a serious threat to brand reputation. Malicious actors can generate realistic audio, video, or image content that impersonates key spokespeople, spreads false statements, and undermines consumer trust. For businesses and organizations, the challenge is no longer limited to managing negative reviews or social media backlash. It now extends to detecting, countering, and recovering from AI-generated misinformation that can go viral in minutes.

This comprehensive guide explores how you can safeguard your brand against deepfakes and AI misinformation. You’ll learn detection techniques, monitoring tools, response planning, stakeholder education, legal measures, and proactive best practices to preserve your online reputation in the age of synthetic media.

Understanding Deepfakes and AI Misinformation

Deepfakes are produced with generative adversarial networks (GANs), autoencoders, diffusion models, and other machine learning techniques to create hyper-realistic images, videos, or voice recordings that appear authentic. These manipulated assets can show a public figure saying things they never said or endorsing products they never used. As generative AI platforms become more accessible, the barrier to creating convincing fakes has dropped dramatically.

Common telltale signs of deepfakes include subtle facial distortions, audio artifacts, and uncanny valley effects. However, advances in AI are rapidly reducing these artifacts, making manual detection increasingly difficult. Misinformation campaigns often pair deepfakes with coordinated disinformation on social media to amplify reach and sow doubt among your audience.

The Role of AI-Generated Content in Brand Promotion

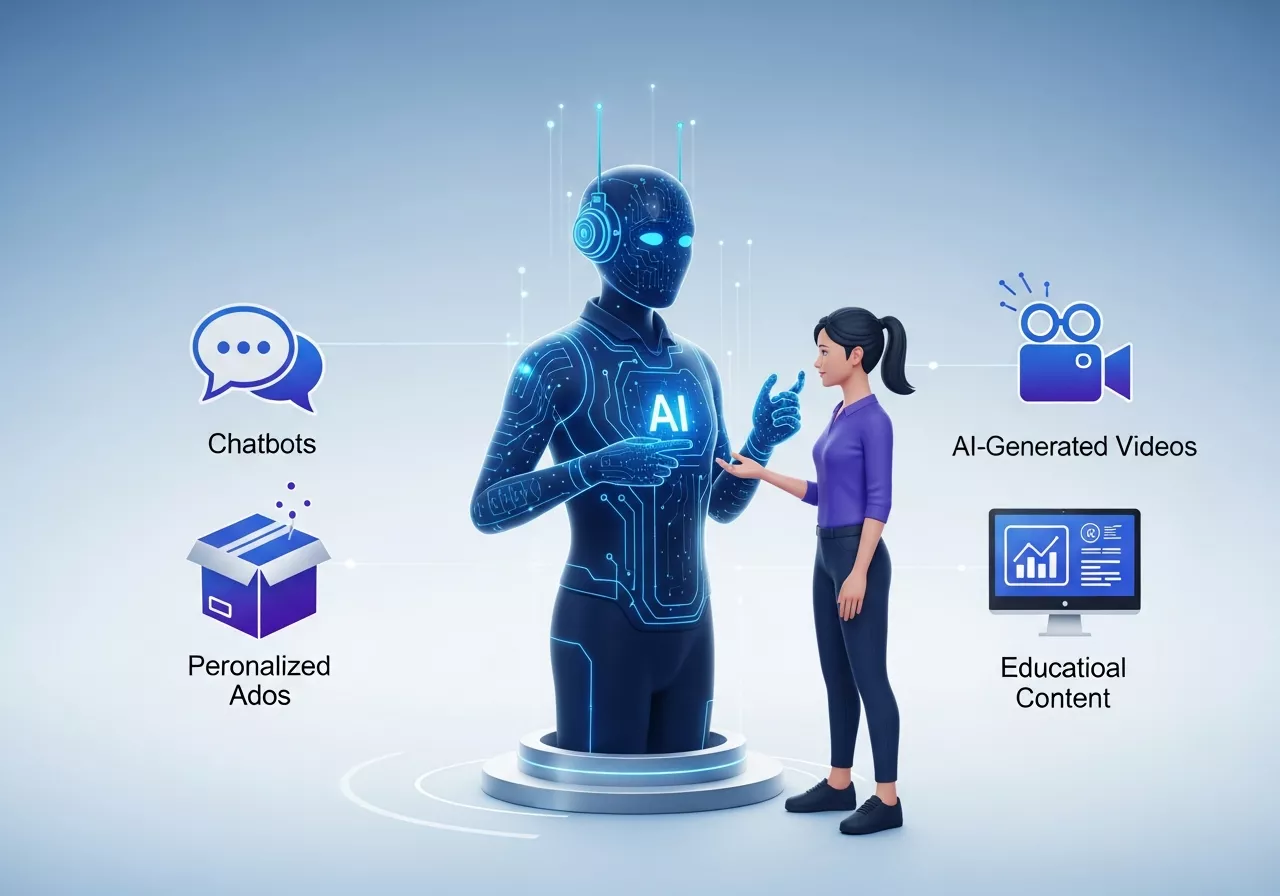

AI-generated content is not only a threat; brands can also use it for positive promotion. The same generative techniques behind deepfakes can be applied creatively to marketing, brand advocacy, and personalized experiences. Here’s how businesses can use synthetic media responsibly:

- Creating Personalized Brand Content: Brands can use AI to generate personalized content, such as AI-based avatars or virtual influencers, that delivers customized marketing messages and deepens customer engagement.

- Innovative Storytelling with AI: Using AI to generate hyper-realistic video ads or product demos can create impactful marketing campaigns. For example, companies can build digital twins of their spokespeople or create unique digital characters to represent their brand.

- Enhanced Customer Interactions: AI-powered chatbots and voice assistants that use synthetic voices or avatars can offer personalized, human-like interactions on websites and social media channels, improving customer service.

- Educational Content for Consumers: AI-generated videos and tutorials can explain complex products or services in an easily digestible format, improving customer understanding and engagement.

However, ethical considerations and transparency must always guide the use of AI-generated content so that it doesn’t cross into deceptive or manipulative practices. Being open with consumers about when content is AI-generated is key to avoiding backlash.

Potential Reputational Damage Caused by Deepfakes

The impact of a viral deepfake on your brand can be devastating. Consider the following scenarios:

- False Statements: A deepfake video circulates showing your CEO making derogatory remarks about a customer segment. Media outlets mistake it for legitimate content, sentiment turns sharply negative, and negative reviews pour in.

- Product Discrediting: A manipulated image suggests your company knowingly sold defective products, prompting calls for recalls, regulatory scrutiny, and a wave of negative reviews across e-commerce platforms and review sites.

- Social Media Attack: Coordinated bot networks share a deepfake interview that undermines your brand values, fueling boycotts, hashtag campaigns, and a surge in negative reviews.

In each case, delayed detection or inadequate response can magnify reputational harm, erode consumer trust, and result in significant revenue loss.

Monitoring and Detection Techniques

To defend against deepfakes, organizations must adopt robust monitoring and detection frameworks. Here are essential steps:

- AI-Powered Detection Tools: Invest in services that specialize in deepfake detection, using neural network analysis to identify anomalies in facial movements, voice intonation, and pixel-level inconsistencies.

- Reverse Image and Video Search: Regularly perform reverse searches on key media assets and public posts to find unauthorized derivatives or manipulated versions.

- Watermarking and Digital Fingerprinting: Embed invisible watermarks or metadata in official video and audio files to verify authenticity and trace alterations.

- Social Listening Platforms: Use reputation management tools to track mentions, sentiment spikes, and emerging chatter that may signal a deepfake campaign.

- Human Review Teams: Combine AI detection with trained specialists who can perform contextual analysis and escalate high-risk content for immediate action.
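Social listening tools differ in how they define a "sentiment spike," but the core idea of flagging an unusual surge in mentions can be sketched in a few lines. The sketch below assumes you can export hourly brand-mention counts as a list of integers; the function name `flag_mention_spikes`, the 24-hour window, and the z-score threshold of 3.0 are hypothetical choices, not settings from any particular platform.

```python
from statistics import mean, pstdev


def flag_mention_spikes(counts, window=24, z_threshold=3.0):
    """Return the indices of hours whose mention count is anomalously high.

    Each hour is compared with the mean and standard deviation of the
    preceding `window` hours (a trailing baseline). The defaults are
    illustrative assumptions, not recommended values.
    """
    spikes = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        mu = mean(baseline)
        # Floor the spread at 1 so a perfectly flat baseline neither
        # divides by zero nor flags trivial one-mention changes.
        sigma = max(pstdev(baseline), 1.0)
        z = (counts[i] - mu) / sigma
        if z >= z_threshold:
            spikes.append(i)
    return spikes


# Hypothetical hourly mention counts: a steady baseline, then a sudden jump.
hourly = [12, 15, 11, 14, 13, 12, 16, 14, 13, 15, 12, 14,
          13, 15, 14, 12, 13, 16, 15, 14, 12, 13, 14, 15,
          180]
print(flag_mention_spikes(hourly))  # [24]
```

A trailing z-score is deliberately simple; it does not account for daily or weekly cycles in mention volume, so a flagged hour is a prompt for the human review team rather than proof of a deepfake campaign.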

Building a Deepfake Response Plan

An effective crisis response plan equips your team to act swiftly when a deepfake emerges. Key components include:

- Incident Response Team: Define a cross-functional group with representatives from PR, Legal, IT, Security, and Executive Leadership.

- Escalation Protocols: Establish clear criteria for escalating suspicious content, including pre-set thresholds for reach and potential impact.

- Communication Templates: Prepare official statements, social media responses, and internal memos that can be rapidly customized and deployed.

- Authorized Verification Channels: Direct audiences to your verified website or social media handles for official confirmations, reducing misinformation spread.

- Post-Incident Review: After containment, conduct a thorough analysis to refine detection methods, update protocols, and strengthen defenses.
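Escalation criteria are easiest to apply consistently when the "pre-set thresholds for reach and potential impact" are written down as explicit rules. The sketch below is one hypothetical way to encode them: the tier names, the 1 to 5 impact score, the `impersonates_executive` flag, and every threshold value are illustrative assumptions that each organization would replace with its own.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    MONITOR = 1   # log the item and keep watching
    ESCALATE = 2  # notify the incident response team
    CRITICAL = 3  # convene the full team, including executive leadership


@dataclass
class SuspiciousItem:
    url: str
    estimated_reach: int          # views or shares observed so far
    impact: int                   # analyst-assigned score from 1 (low) to 5 (severe)
    impersonates_executive: bool  # does it depict a named spokesperson?


# Hypothetical thresholds; each organization should calibrate its own.
REACH_ESCALATE = 10_000
REACH_CRITICAL = 100_000
IMPACT_HIGH = 4


def classify(item: SuspiciousItem) -> Tier:
    """Map a suspicious item to an escalation tier using preset thresholds."""
    if not 1 <= item.impact <= 5:
        raise ValueError("impact must be between 1 and 5")
    if item.estimated_reach >= REACH_CRITICAL or (
        item.impersonates_executive and item.impact >= IMPACT_HIGH
    ):
        return Tier.CRITICAL
    if item.estimated_reach >= REACH_ESCALATE or item.impact >= IMPACT_HIGH:
        return Tier.ESCALATE
    return Tier.MONITOR


clip = SuspiciousItem("https://example.com/clip", estimated_reach=2_500,
                      impact=5, impersonates_executive=True)
print(classify(clip))  # Tier.CRITICAL
```

Note that a low-reach clip can still be critical when it impersonates an executive with high potential impact; waiting for reach to grow before escalating is exactly the delay the response plan is meant to avoid.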

Implementing a Crisis Communication Strategy for Deepfake Attacks

In addition to detection and prevention, a crisis communication strategy is critical when managing deepfake attacks on your brand. Proactive, transparent communication helps minimize damage and restore consumer confidence. An effective crisis communication plan for deepfake attacks includes these essential elements:

- Acknowledge and Clarify Immediately: As soon as a deepfake attack is detected, acknowledge the situation. Issue a statement through your social media accounts, your website, and a press release that explains what is happening, confirms that the content is fake once your team has verified it, and assures stakeholders that you are investigating.

- Assign a Crisis Communication Team: Your crisis communication team should include PR professionals, legal experts, senior leadership, and technical experts. Train this team to handle a deepfake crisis, including how to communicate with the media, customers, and influencers.

- Use Authorized Communication Channels: To counter misinformation, always redirect people to verified sources. Encourage customers to rely on your official social media handles and website for updates, and establish clear points of contact for anyone needing more information.

- Monitor and Respond Quickly: Use monitoring tools to track the spread of the deepfake. Keep 24/7 monitoring in place to detect suspicious content or mentions of your brand across platforms, and have the crisis team ready to act quickly.

- Provide Transparent Updates: Share regular updates as the situation evolves. Being open about what has been done and what is still being done to resolve the issue builds trust with your audience and stakeholders.

Educating Stakeholders and Employees

Human error or unawareness can exacerbate a deepfake crisis. Training programs should cover:

- Recognizing Deepfakes: Teach employees to spot common signs, such as lip-sync mismatches, unnatural lighting, and audio distortions.

- Reporting Channels: Provide clear instructions for flagging suspicious content internally and externally without amplifying it inadvertently.

- Social Media Best Practices: Advise spokespeople on how to verify content before sharing and how to provide disclaimers when authenticity is uncertain.

- Executive Briefings: Keep leadership informed of evolving deepfake risks and the organization’s mitigation strategies.

Leveraging Legal and Policy Measures

Legal frameworks can help deter deepfake misuse and support takedown efforts:

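- Digital Millennium Copyright Act (DMCA): Where a deepfake reuses footage, images, or audio whose copyright your organization owns, send DMCA takedown notices to have it removed from platforms that follow the U.S. notice-and-takedown process.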

- Terms of Service Enforcement: Work with social networks and video platforms to report violations of their community guidelines or terms of use.

- Defamation and Right of Publicity Claims: Consult legal counsel to assess whether deepfakes constitute defamation or unauthorized commercial use of likeness.

- Industry Coalitions: Join cross-sector alliances advocating for stronger regulations and shared best practices on synthetic media governance.

Collaborating with Platforms and Industry Leaders to Combat Deepfakes

Collaboration is one of the most effective ways to limit deepfake attacks and combat misinformation. This section outlines how brands can work with social media platforms, industry leaders, and regulators to build a more resilient defense against deepfakes.

- Partnering with Tech Platforms: Platforms like Facebook, X (formerly Twitter), YouTube, and Instagram are at the forefront of detecting and removing AI-generated misinformation. By working directly with these platforms, brands may gain faster access to content removal tools and reporting features, which can help ensure prompt action when deepfakes appear.

- Industry Collaboration and Information Sharing: Brands can join industry coalitions and collaborate with other companies facing similar challenges. Sharing threat intelligence and best practices improves the ability to detect and neutralize deepfake campaigns early, and coalitions can set common standards for identifying AI-manipulated media.

- Government and Regulatory Advocacy: Work with government agencies to help shape regulations that address AI-generated misinformation and deepfakes. Advocating for AI content transparency laws and mandatory labeling of synthetic media can give brands and consumers clearer guidelines.

- Engaging AI Research Communities: Collaborate with AI research communities to stay current on advances in deepfake detection and prevention. Supporting initiatives that improve detection algorithms and promote ethical AI use can further safeguard your brand.

Platform Collaboration Overview

| Platform | Collaboration Opportunities | Benefits |
|---|---|---|
| Facebook/Instagram | Reporting fake content, proactive takedowns | Faster removal of malicious content |
| YouTube | Implementing AI-based detection tools | More effective content moderation |
| X (formerly Twitter) | Creating guidelines for content authenticity | Reduced viral spread of misinformation |
| Tech Companies | Collaborating on developing better detection tools | Improved security and content verification |

Best Practices for Proactive Protection

Beyond detection and response, adopt these proactive measures to reduce deepfake risks:

- Watermark Your Content: Embed visible and invisible watermarks in official videos and images to assert authenticity.

- Publish Verified Originals: Maintain a centralized media library of authorized assets accessible via secure APIs for partners and press.

- Use Multi-Factor Verification: For sensitive announcements, give audiences an additional way to confirm authenticity (e.g., digital signatures, blockchain-anchored records, or published cryptographic hashes of the official files).

- Collaborate with Platforms: Engage directly with social media and hosting services to flag AI-generated content at scale.

- Regular Audits: Conduct quarterly reviews of your digital footprint and detection workflows to stay ahead of evolving AI tactics.
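Publishing cryptographic hashes of verified originals can be sketched with Python’s standard `hashlib`: the organization publishes a manifest of SHA-256 digests for its official files, and anyone can hash a circulating file and check it against that list. The function names and the manifest format (file name mapped to hex digest) are hypothetical. A hash only proves a byte-for-byte match; platforms routinely re-encode uploads, so a file that fails to match is not necessarily manipulated, only not an unmodified official release. Signing the manifest itself would be a further step not shown here.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(paths):
    """Map each official asset's file name to its SHA-256 digest."""
    return {Path(p).name: sha256_of(p) for p in paths}


def matches_official(path, manifest):
    """Return the name of the official asset with an identical hash, or None."""
    by_digest = {digest: name for name, digest in manifest.items()}
    return by_digest.get(sha256_of(path))


# Demo with throwaway files standing in for real media.
with tempfile.TemporaryDirectory() as tmp:
    official = Path(tmp, "ceo_statement.mp4")
    official.write_bytes(b"original video bytes")
    manifest = build_manifest([official])

    circulating = Path(tmp, "circulating_clip.mp4")
    circulating.write_bytes(b"original video bytes, edited")

    official_match = matches_official(official, manifest)
    circulating_match = matches_official(circulating, manifest)

print(official_match)     # ceo_statement.mp4
print(circulating_match)  # None
```

Reading the file in chunks keeps memory use flat even for large video files, which matters if the manifest covers a full media library.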

Future Trends in AI Misinformation and Online Reputation Management (ORM)

As AI models grow more sophisticated, expect new challenges in reputation management:

- Real-Time Synthetic Media Generation: Attackers will deploy live deepfake streams in webinars or video calls, requiring instantaneous verification systems.

- Hyper-Personalized Misinformation: AI will craft targeted deepfakes leveraging personal data to manipulate key stakeholders or influencers.

- Regulatory Evolution: Governments and industry bodies will introduce stricter rules on AI content labeling and provenance tracking.

- Collaborative Defense Networks: Shared repositories of flagged media and verification protocols will emerge to speed cross-organizational responses.

Conclusion

Deepfakes and AI-generated misinformation represent the next frontier in online reputation threats. By combining advanced monitoring tools, a structured response plan, stakeholder education, legal measures, and proactive best practices, you can shield your brand from synthetic content attacks. Start by assessing your current detection capabilities, formalizing your crisis protocols, and training your teams. With a forward-looking ORM strategy tailored for deepfakes, you’ll maintain trust, credibility, and brand integrity in an era of relentless AI innovation.

FAQ: Guarding Your Brand Against Deepfakes and AI Misinformation

1. What exactly is a deepfake, and how can it affect my brand?

Answer: A deepfake is a type of synthetic media, such as video, audio, or images, created using artificial intelligence (AI) to manipulate real-life content. It can impersonate key figures in your company, making them appear to say or do things they didn’t, which can damage your brand’s reputation. For example, a deepfake video showing your CEO making offensive comments could lead to media backlash and loss of consumer trust.

2. How can I detect a deepfake targeting my brand?

Answer: Detection involves using AI-powered tools that analyze subtle facial distortions, voice anomalies, and pixel inconsistencies. You should also perform regular reverse image and video searches to find altered content, use social listening platforms to track rising mentions, and watermark your content to help verify authenticity. Combining automated tools with human oversight helps you catch threats sooner.

3. Should I worry about deepfakes only on social media?

Answer: While social media is a major channel for deepfake dissemination, you should also monitor websites, news outlets, and messaging platforms. Attackers may target corporate communication channels or send deepfake content directly to key stakeholders, so monitor every digital touchpoint where your brand’s image and reputation may be at risk.

4. How can I protect my brand from deepfake attacks proactively?

Answer: Implement proactive strategies like watermarking your media, conducting regular audits of your digital content, and maintaining a verified media library. Work closely with platforms to flag AI-generated content and consider using multi-factor identity verification for sensitive communications. These practices help mitigate risks by making it easier to identify and combat deepfakes early.

5. What should I do if a deepfake targeting my brand goes viral?

Answer: Act quickly by activating your crisis response plan. This should include clear escalation protocols, communication templates, and authorized verification channels. Issue a public statement via your verified social media accounts and direct audiences to your official website for clarification. Collaborate with platforms to take down the manipulated content, and monitor social channels to track the spread of misinformation.

6. Can legal measures help me protect my brand from deepfakes?

Answer: Yes. Where a deepfake reuses material whose copyright you own, the Digital Millennium Copyright Act (DMCA) lets you request its removal from platforms. You may also have grounds for a defamation claim if a deepfake spreads false, damaging statements about your brand, or a right-of-publicity claim if it uses someone’s likeness without permission. Consult legal experts to assess potential claims and support takedown efforts.

7. How can I educate my employees about deepfake risks?

Answer: Conduct training sessions to help employees recognize deepfakes by teaching them common signs, such as unnatural movements, mismatched lip-sync, and distorted audio. Establish internal reporting channels so suspicious content can be flagged immediately. Additionally, encourage social media best practices, such as verifying content before sharing it and never resharing unverified videos or images.

8. How can I collaborate with other organizations or platforms to tackle deepfakes?

Answer: Work with tech platforms, such as social media companies and video hosts, to establish clear channels for reporting and removing deepfakes. Join industry coalitions focused on combating synthetic media and stay informed about regulatory efforts to prevent deepfake misuse. Shared resources and collective defense mechanisms strengthen the overall industry response to AI-generated misinformation.

9. What steps can I take if I don’t have a big budget for deepfake detection and protection tools?

Answer: Even with limited resources, you can take several steps to safeguard your brand. Start by using free or low-cost AI detection tools, regularly monitor your media with reverse image and video searches, and collaborate with platforms and media outlets for content verification. Additionally, focusing on transparency and proactive communication with your audience can help mitigate the impact of any misinformation.

10. Will deepfake technology continue to improve? How can I stay ahead of emerging threats?

Answer: Yes, deepfake technology is evolving rapidly. As AI models become more sophisticated, the quality of synthetic media will improve, making detection more challenging. To stay ahead, continuously update your monitoring tools, stay informed about emerging detection technologies, and maintain an agile crisis response plan. Keep an eye on regulatory developments as governments may introduce new laws to govern synthetic media.
